If you sit on an institutional review board (IRB) or other research ethics committee, you need to know how to assess projects that use electronic forms of data collection. If you’re accustomed to paper data collection, you’ll want to know what new questions to ask about electronic methods. Rest assured that electronic data can actually be kept substantially safer than paper… if handled properly.

This post discusses a series of questions that a committee safeguarding human subjects should ask of projects employing electronic data collection, as well as the options and underlying issues involved. The goal is to keep data safe and secure.

1. What data will be collected?

You’ll want to know, of course, the exact questions that will be asked of each respondent. But electronic devices can collect data in a variety of formats. Will the project collect photos, audio, or video? If so, of whom or of what? Will the project collect GPS locations? Audio recordings and GPS locations are often captured for quality control rather than for analysis, but they still count as collected data.

2. How sensitive is the data that will be collected?

Universities typically have a classification system identifying different levels of sensitivity (see a specific example from Harvard here, and a more general interpretation here). Sensitivity is judged by the level of perceived harm that could come from a leak of the data. Much of the sensitive data in human subjects research is Personally Identifiable Information (PII): information that directly identifies someone (e.g., name, address, identifying image, GPS point of the home), or information that could be linked to someone and cause that person harm if released (e.g., financial information or health records). The greater the estimated harm from an accidental release, the greater the sensitivity of the data, and the tighter the security measures the electronic data will require.
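A useful way to think about a whole dataset is that it is only as safe as its most sensitive field. The minimal Python sketch below assumes exactly that rule. The four levels, the field names, and their assignments in `FIELD_SENSITIVITY` are hypothetical placeholders, not Harvard’s or any other institution’s scheme. One design choice is worth noting: fields nobody has classified default to the highest level, so new data types are treated cautiously until someone reviews them.

```python
from enum import IntEnum


class Sensitivity(IntEnum):
    """Hypothetical sensitivity levels; each institution defines its own."""
    PUBLIC = 1
    LOW = 2
    MODERATE = 3
    HIGH = 4


# Hypothetical mapping from survey field to sensitivity level.
FIELD_SENSITIVITY = {
    "age_bracket": Sensitivity.LOW,
    "crop_yield": Sensitivity.LOW,
    "village_name": Sensitivity.MODERATE,
    "respondent_name": Sensitivity.HIGH,
    "home_gps": Sensitivity.HIGH,
    "health_history": Sensitivity.HIGH,
}


def project_sensitivity(fields):
    """Rate a dataset at the level of its most sensitive field.

    Fields missing from FIELD_SENSITIVITY are treated as HIGH.
    An empty field list is rated PUBLIC.
    """
    return max(
        (FIELD_SENSITIVITY.get(f, Sensitivity.HIGH) for f in fields),
        default=Sensitivity.PUBLIC,
    )
```

Under these assumptions, adding a single field such as `home_gps` to an otherwise low-sensitivity survey raises the whole dataset to the highest level.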

3. Will the data-collection devices be password-protected? Who will have access?

Most devices offer password protection. Ask specifically who will hold the passwords and what each password grants access to. For example, you may want one password for the research manager, with broader access, and a separate password for the enumerator, who can only open the application used to enter data on the device (but cannot see any previously entered data).
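The two-password arrangement above is a simple form of role-based access. The sketch below models it in Python as an illustration only, not as how any particular device or app implements it. The role names and the permission names (`enter_data`, `view_submissions`, `export`) are hypothetical. The sketch also assumes passwords are stored as salted PBKDF2 hashes rather than in plain text, so a copy of the stored credentials does not directly reveal the passwords.

```python
import hashlib
import hmac
import os

# Hypothetical permissions per role; a real project would define its own.
ROLE_PERMISSIONS = {
    "manager": {"enter_data", "view_submissions", "export"},
    "enumerator": {"enter_data"},  # can enter new data, cannot see past entries
}


def hash_password(password: str, salt: bytes) -> bytes:
    # PBKDF2 makes brute-force guessing slow if the stored hashes ever leak.
    return hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, 100_000)


class DeviceAccess:
    def __init__(self) -> None:
        self._credentials = {}  # role -> (salt, password hash)

    def set_password(self, role: str, password: str) -> None:
        if role not in ROLE_PERMISSIONS:
            raise ValueError(f"unknown role: {role}")
        salt = os.urandom(16)
        self._credentials[role] = (salt, hash_password(password, salt))

    def can(self, role: str, password: str, action: str) -> bool:
        """True only if the password matches the role AND the role grants the action."""
        if role not in self._credentials:
            return False
        salt, stored = self._credentials[role]
        # Constant-time comparison avoids leaking how much of the hash matched.
        if not hmac.compare_digest(stored, hash_password(password, salt)):
            return False
        return action in ROLE_PERMISSIONS[role]
```

With this set-up, an enumerator who knows only the enumerator password can enter data but is refused when trying to view earlier submissions, which is the separation the committee would be asking about.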

4. How will informed consent be handled?

Respondents should typically be informed of the full range of data-collection activities to which they are consenting, including the capture of GPS location, audio, video, or photos (if any). Respondents should be able to refuse participation altogether, decline to answer individual questions, or decline particular forms of data capture (such as audio recordings or photos). Informed consent is typically recorded with a signature, though sometimes simple verbal consent is allowed. A signature can be captured electronically, with the respondent signing on the screen with a stylus or finger; verbal consent can be audio recorded. If a physical signature on paper is required, the research team might need to print out a special consent form.
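Granular consent is easiest to enforce when the survey records it as data and checks it before each capture. The Python sketch below is a hypothetical illustration of that idea. The `ConsentRecord` fields and the capture labels (`"gps"`, `"audio"`, `"photo"`) are assumptions, not the format of any specific tool.

```python
from dataclasses import dataclass, field
from typing import Set


@dataclass
class ConsentRecord:
    """Hypothetical per-respondent consent record."""
    participates: bool
    method: str  # e.g. "electronic_signature", "verbal_audio", "paper_form"
    allowed_captures: Set[str] = field(default_factory=set)  # e.g. {"gps", "photo"}


def may_capture(consent: ConsentRecord, capture_type: str) -> bool:
    """Allow a capture only if the respondent participates AND agreed to this type."""
    return consent.participates and capture_type in consent.allowed_captures
```

A respondent who agrees to take part but declines audio would get a record such as `ConsentRecord(True, "electronic_signature", {"gps"})`, and the survey would then skip any audio recording for that interview.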

5. Will data be encrypted once it is collected and saved to the device?

Encryption is the encoding of data so that no one but the holder of a “key” can read (decrypt) it. It is analogous to keeping paper data collection forms in a locked cabinet. Security software generates a public/private key pair: the public key is loaded onto the data-collection devices and can only encrypt data, while the private key is needed to decrypt it. The researcher is responsible for keeping the private key safe and secure. Anyone who obtains the private key can read the data; if the key is lost, no one can, and the data is effectively gone (so there is a risk: the researcher must be careful not to lose the private key). Encryption can typically be done on the device once the survey form is complete. All collected data (including audio recordings, photos, etc.) might be encrypted, or only the PII, leaving less-sensitive, non-PII fields readable for monitoring or quality control by staff without the decryption key. Once data is encrypted, it is no longer readable by anyone with access to the device unless they hold the private key.
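To show the key roles and the PII-only option concretely, here is a deliberately toy Python sketch using textbook RSA with tiny primes. It is insecure and for illustration only: real tools use vetted cryptographic libraries, much larger keys, and typically combine public-key encryption with a symmetric cipher. The field names in `PII_FIELDS` are hypothetical, and `pow(E, -1, PHI)` requires Python 3.8 or later.

```python
# TOY ONLY: textbook RSA with tiny primes. Never use this for real data.
P, Q = 61, 53
N = P * Q                # public modulus (3233)
PHI = (P - 1) * (Q - 1)  # 3120
E = 17                   # public exponent
D = pow(E, -1, PHI)      # private exponent (2753), Python 3.8+

PUBLIC_KEY = (E, N)   # may be copied onto every data-collection device
PRIVATE_KEY = (D, N)  # kept only by the research team

PII_FIELDS = {"name", "home_gps"}  # hypothetical field names


def encrypt_value(text, public_key):
    e, n = public_key
    # Each byte is encrypted separately and deterministically; this is one
    # reason the scheme is insecure (identical values give identical output).
    return [pow(b, e, n) for b in text.encode("utf-8")]


def decrypt_value(cipher, private_key):
    d, n = private_key
    return bytes(pow(c, d, n) for c in cipher).decode("utf-8")


def encrypt_submission(record, public_key):
    """Encrypt PII fields on the device; leave non-PII fields readable for monitoring."""
    return {k: encrypt_value(v, public_key) if k in PII_FIELDS else v
            for k, v in record.items()}


def decrypt_submission(record, private_key):
    """Only someone holding the private key can recover the PII fields."""
    return {k: decrypt_value(v, private_key) if k in PII_FIELDS else v
            for k, v in record.items()}
```

A device holding only `PUBLIC_KEY` can produce encrypted submissions, but nothing on the device can reverse them; monitoring staff still see the non-PII `crop` field in the clear.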

6. Who will have access to decrypted data?

Typically only the core researchers and necessary project personnel will ever have access to the full, decrypted dataset. These team members should be trained in human subjects protection, as is standard with any data collection, but it is also important that they be familiar with the technologies needed to keep electronic data secure (e.g., password protection, encryption, and the other methods described in this post). The full dataset, including PII, is rarely necessary for most analysis, so a non-PII subset of the data may be separated out and shared with a wider group. At the extreme, a non-PII version of the dataset may eventually be shared with the public. It is important, however, that this version truly protects confidentiality: GPS locations, village names combined with household characteristics, and other data can in practice be used to identify particular respondents, so care must be taken in deciding what goes into the non-PII subset.
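One common way to check whether a “non-PII” subset can still single people out is k-anonymity: every combination of indirectly identifying fields should be shared by at least *k* respondents. This technique is not named in the post; the Python sketch below brings it in as one possible check. The lists of direct identifiers and quasi-identifiers are hypothetical and would need to be chosen for each study.

```python
from collections import Counter

# Hypothetical field lists; each study must decide its own.
DIRECT_IDENTIFIERS = {"name", "phone", "home_gps"}
QUASI_IDENTIFIERS = ("village", "household_size", "occupation")


def drop_direct_identifiers(rows):
    """Remove fields that identify a respondent on their own."""
    return [{k: v for k, v in row.items() if k not in DIRECT_IDENTIFIERS}
            for row in rows]


def smallest_group(rows, quasi=QUASI_IDENTIFIERS):
    """Size of the smallest group sharing the same quasi-identifier values.

    A result of 1 means some respondent is unique on these fields and
    could potentially be re-identified, even without a name.
    """
    counts = Counter(tuple(row.get(q) for q in quasi) for row in rows)
    return min(counts.values()) if counts else 0


def is_k_anonymous(rows, k, quasi=QUASI_IDENTIFIERS):
    return smallest_group(rows, quasi) >= k
```

For example, if only one household in a given village has nine members and a teacher as its head, dropping the name column is not enough: `smallest_group` returns 1 and the subset fails even a 2-anonymity check.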

7. What data will be used for quality control or survey tracking? And how?

Identifying information is often helpful for the data-collection team to have in real-time – for example, location information for the households that have or have not yet been surveyed. Specifically, GPS locations are commonly used to track survey logistics, to see where interviews have happened. Depending on where the survey happens, this may amount to sensitive information (e.g., the location of a respondent’s home).

It is important to know how the field and monitoring teams want to use this data. Will it be left unencrypted and published to Google or another website for easy viewing? If so, what security measures will keep that data safe? If not, it is still possible to view maps of GPS coordinates (for example, using Google Earth on a local computer), but will those maps be viewed on an Internet-connected laptop, or on a “cold room” computer that is never connected to the Internet? All of these set-ups are possible with a data-collection system like SurveyCTO, and each offers a different degree of security (discussed in further detail below).
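If the monitoring team only needs to see *where work has happened*, one option (not discussed in the post, and offered here as an assumption) is to coarsen coordinates before they reach any tracking map. The Python sketch below rounds latitude and longitude and counts completed interviews per rounded cell. The submission keys (`lat`, `lon`, `completed`) are hypothetical. Two decimal places is roughly 1.1 km north-south; a cell containing a single household can still point to that home, so the right precision depends on settlement density.

```python
from collections import Counter


def coarsen(lat, lon, decimals=2):
    """Round coordinates so a map shows the area, not the exact home."""
    return (round(lat, decimals), round(lon, decimals))


def progress_by_area(submissions, decimals=2):
    """Count completed interviews per coarse grid cell for field tracking.

    Each submission is assumed to be a dict with "lat", "lon", and "completed" keys.
    """
    return Counter(
        coarsen(s["lat"], s["lon"], decimals)
        for s in submissions
        if s.get("completed")
    )
```

Only the rounded cells and counts would then be published for tracking, while the precise coordinates stay in the encrypted dataset.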

8. How will the data flow, exactly? Where will it go and how?

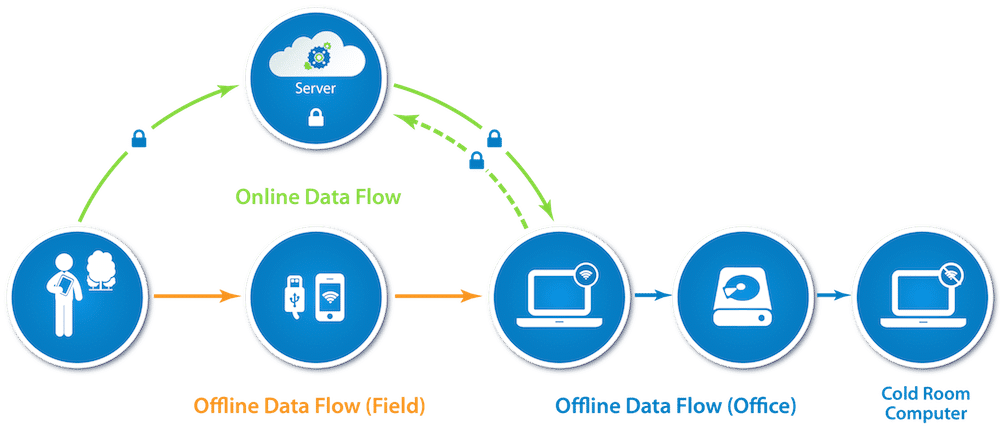

There are different ways the data can be transferred between devices, and the appropriate method should be chosen based on the sensitivity of the data. As mentioned above, some of these transfers may take place while the data collectors are in the field, away from the office. The figure above shows three typical types of data flow; for each, you will want to check the data’s encryption and security at every transit point (marked by arrows) and storage point (marked by circles).

- Online Data Flow (green in the figure): Data transits over the Internet and rests on a server between the device and a computer. Ensure the data is transferred through a secure channel over the Internet, and that the server itself (whether physical or cloud-based) is secure from unauthorized access. If the data is encrypted so that only the research team’s private key can decrypt it, it cannot easily be viewed on the web (e.g., with Google Spreadsheets or Fusion Tables for fieldwork tracking), but it is then protected both in transit and while it sits on any intermediate server.

- Offline Data Flow (orange in the figure): Data transits from the device to a laptop, possibly over USB or possibly over a local wi-fi network. Optionally, it may then pass through a server before reaching another laptop.

- Cold Room Set-up (blue in the figure): If a cold room computer is used, then encrypted data from either of the above flows would only be accessed after an additional transfer – to a computer that is never connected to the Internet – where that data is then decrypted. This is for the most sensitive data. The private key may be generated and only ever held on the cold room computer (with some safe key back-up system in case the cold room computer fails).
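A committee can review a proposed flow point by point, exactly as the figure suggests. The Python sketch below is a hypothetical model of that review, not a feature of SurveyCTO or any other tool. It assumes a simple policy: unencrypted data must never cross the Internet, and when a cold room is required, plaintext may exist only on machines that are never online. For transit points, `encrypted=True` stands for either encrypted data or a secure channel.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Point:
    name: str
    kind: str                 # "transit" (arrow) or "storage" (circle)
    encrypted: bool           # encrypted data, or a secure channel for transit
    internet_connected: bool


def audit(flow, cold_room_required):
    """Return names of points where readable data is more exposed than policy allows.

    Hypothetical policy:
    - unencrypted transit over the Internet is always flagged;
    - if cold_room_required, unencrypted storage on any Internet-connected
      machine is flagged too.
    """
    problems = []
    for p in flow:
        if p.encrypted or not p.internet_connected:
            continue
        if p.kind == "transit" or cold_room_required:
            problems.append(p.name)
    return problems


# Example: online flow, encrypted end to end, decrypted only in a cold room.
ONLINE_WITH_COLD_ROOM = [
    Point("device", "storage", True, True),
    Point("upload", "transit", True, True),
    Point("server", "storage", True, True),
    Point("download", "transit", True, True),
    Point("usb_to_cold_room", "transit", True, False),
    Point("cold_room", "storage", False, False),
]
```

Auditing `ONLINE_WITH_COLD_ROOM` with a cold room required returns an empty list, while a flow whose server stores plaintext would be flagged at `"server"`.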

9. How will the data be stored? What will happen to it at the end of the storage period?

You’ll want to know every device on which the data will be readable, and ask about password protection and encryption while the data sits on those devices. This includes any data viewed in the field, the final resting place of the dataset, and any and all data back-ups. You’ll want to know which servers the unencrypted data may rest on, and be sure that those are fully secure. Finally, most data is only stored for a limited period of time. Ask how the project plans to destroy the electronic data once that period is up. Standard file deletion typically removes only the reference to a file, leaving its contents recoverable from the storage medium; software that performs a complete data erasure might be required.
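The difference between deleting and erasing can be sketched in a few lines of Python: overwrite the file’s contents, force the write to disk, and only then delete it. This is a best-effort illustration under stated assumptions, not a substitute for dedicated erasure software. On SSDs and the flash storage in phones and tablets, wear leveling means old blocks may survive an overwrite, and copies in back-ups or on servers are untouched. The function name `overwrite_and_delete` is hypothetical.

```python
import os


def overwrite_and_delete(path, passes=1):
    """Best-effort: overwrite a file with random bytes, flush to disk, then delete it.

    NOT guaranteed on SSDs or phone/tablet flash storage (wear leveling may
    keep old blocks), and does nothing about back-ups or copies elsewhere.
    """
    size = os.path.getsize(path)
    with open(path, "r+b") as f:
        for _ in range(passes):
            f.seek(0)
            remaining = size
            while remaining > 0:
                chunk = min(remaining, 1024 * 1024)  # write at most 1 MiB at a time
                f.write(os.urandom(chunk))
                remaining -= chunk
            f.flush()
            os.fsync(f.fileno())  # ask the OS to push the overwrite to the device
    os.remove(path)
```

Because of these limits, a project handling highly sensitive data would more likely rely on purpose-built erasure tools or full-device encryption, and the committee can ask which approach is planned.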

Safeguarding human subjects data

As an IRB or research ethics committee, it’s up to you to ensure that human subjects data is appropriately safeguarded. Hopefully this discussion has given you a sense of reasonable expectations for a project using electronic data collection. The key question is: What data needs to be protected, and when? Your committee decides how sensitive the data is and sets corresponding boundaries for the project.