We’ve noticed OpenAI’s ChatGPT taking the world by storm. Is it a good tool for users of SurveyCTO? Our product team investigates.

The promise of artificial intelligence to streamline, simplify, or even eliminate work is tantalizing — especially to those who work in industries already heavily impacted by technology.

At Dobility we’ve been paying keen attention to the explosion of ChatGPT and similar AI tools and technologies in the past few months. We’re tech enthusiasts, and so we’re always curious about new developments.

As survey platform innovators, we were eager to know how this new chatbot could potentially assist SurveyCTO users. We were also cautious. We know ChatGPT isn’t a tool designed for form programming or survey design. But we still wanted to explore potential applications for our platform and the survey design field.

In this article, we put ChatGPT to the test with some common form programming and survey design tasks. Keep reading to see the results and our final verdict.

What is ChatGPT, and can it be used in survey form programming?

ChatGPT is an artificial intelligence chatbot that uses natural language processing to understand human-language queries and formulate responses. It is a large language model that produces text-based responses to questions and input, whether that means drafting a piece of written content or generating text for an XML file or a spreadsheet. While it has many interesting applications, it is not programming software, nor was it designed with survey programming or design in mind.

Given these capabilities, we first wanted to see what level of form programming ChatGPT could generate. We quickly learned that it does not have the ability to fully program surveys. However, it does have the ability to do some programming on a question-by-question basis.

We tested a few common form programming techniques to see how well ChatGPT performed.

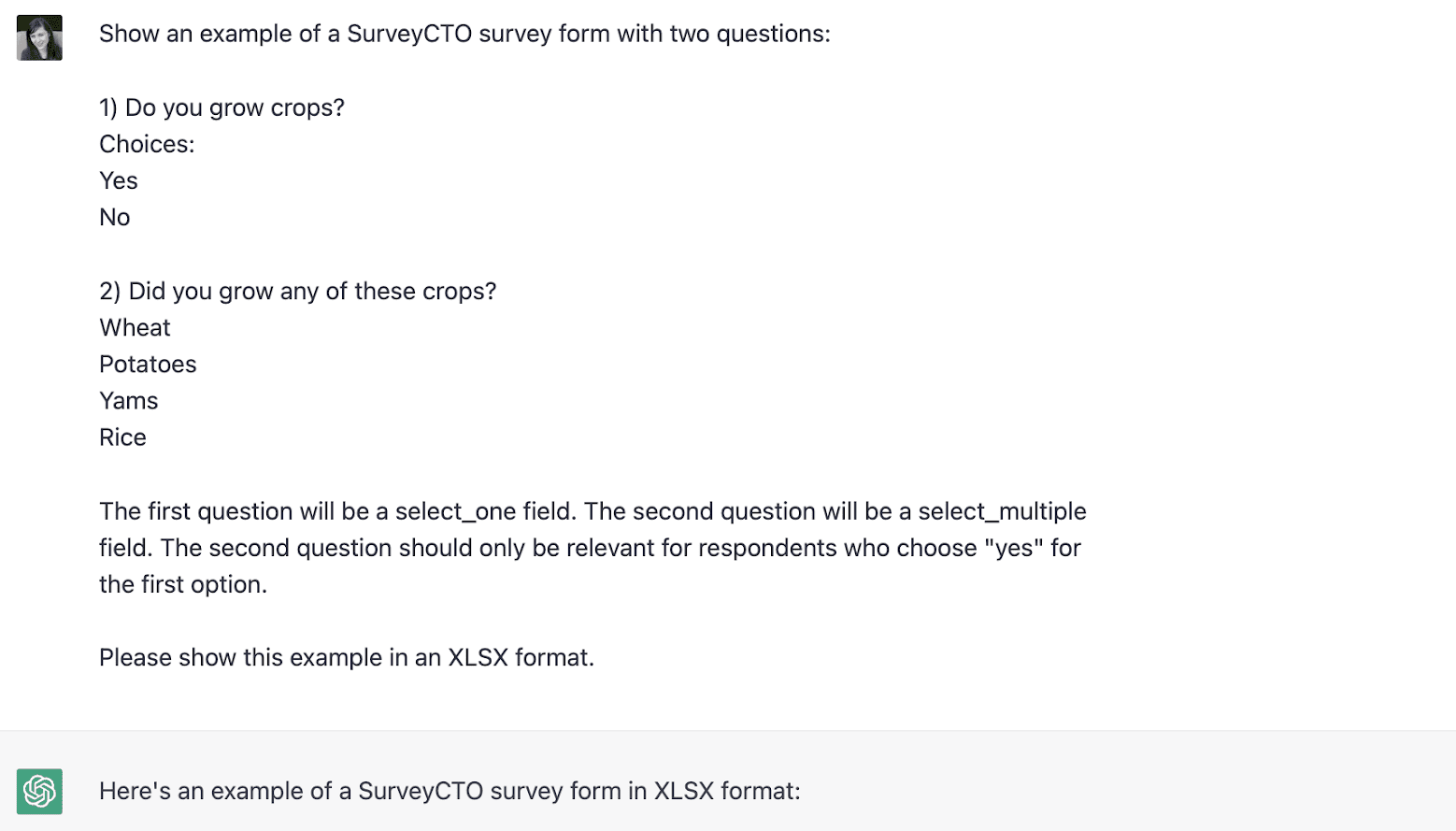

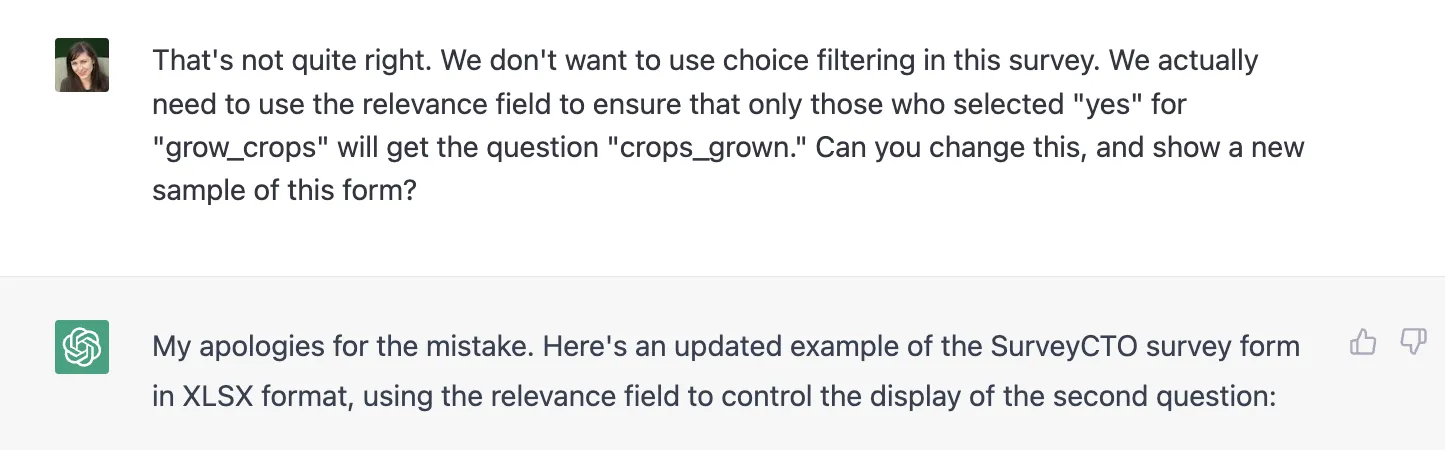

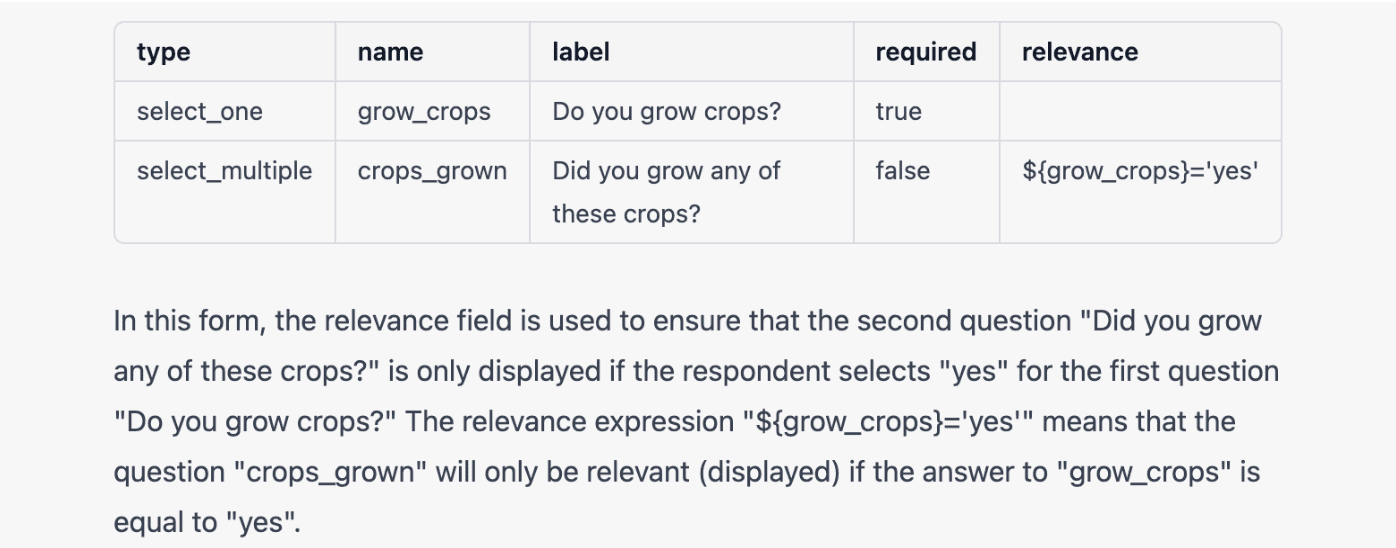

Test #1: Relevance

We started with a straightforward request. Could ChatGPT successfully show an example of relevance in a SurveyCTO form? Here are the screenshots of our conversation with the chatbot:

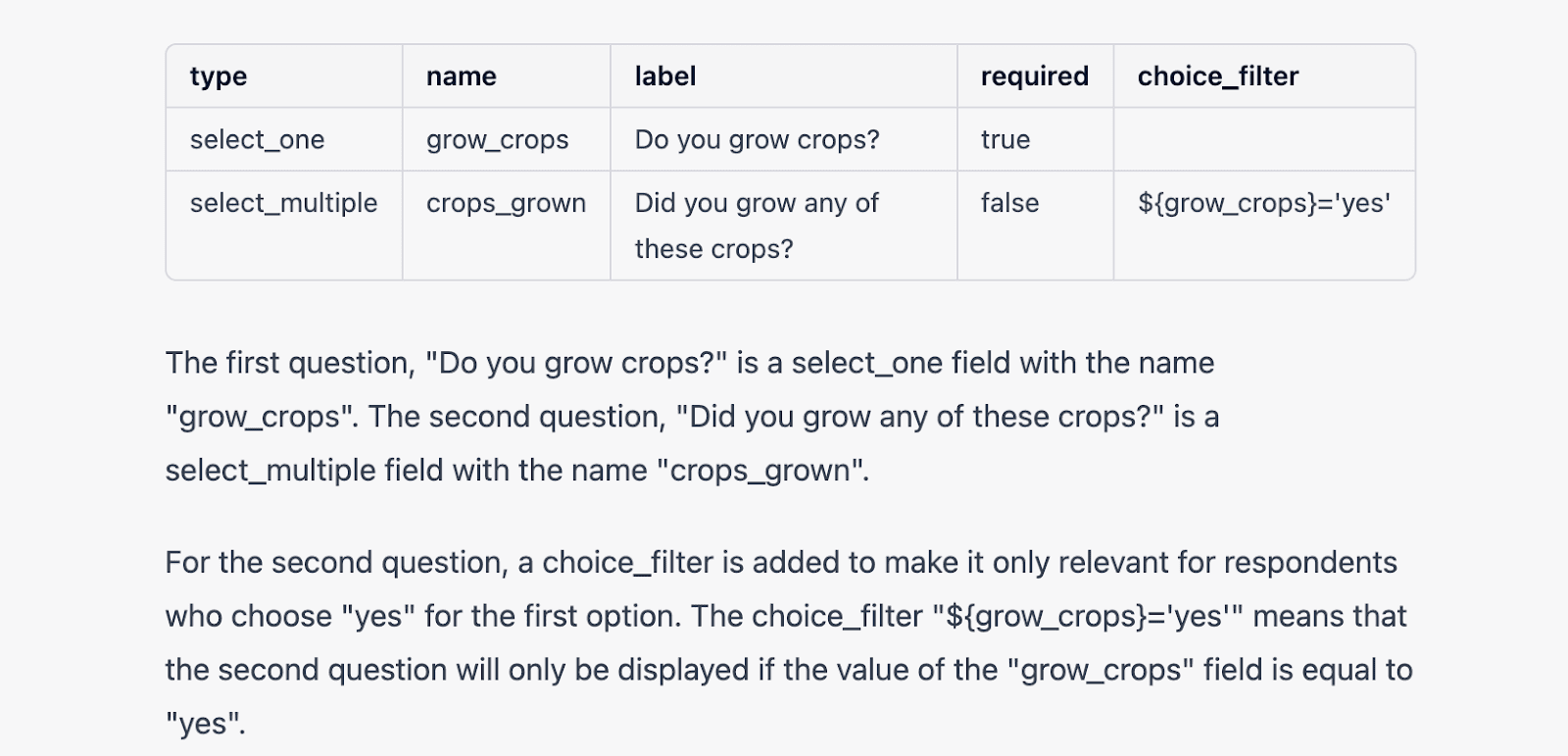

If you’ve programmed surveys with SurveyCTO, you’ll recognize that this first attempt from ChatGPT wasn’t quite right. The relevance expression was located in the choice_filter column, instead of the relevance column where it belongs. Further conversation was needed!

This time, ChatGPT came much closer to showing how to program relevance for Question 2, though you will notice that no choice list name was provided in the type column, so that would still need to be added to the final form. It also made assumptions about whether or not a field was required (nothing about this was mentioned in our prompts).
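To make the idea of relevance concrete, here is a minimal Python simulation; it is not SurveyCTO code. It assumes a hypothetical two-question form (field names q1 and q2, a hypothetical yesno choice list) and shows that a field with a relevance expression is displayed only when that expression is true. The Python lambda stands in for the SurveyCTO expression stored in the relevance column, which SurveyCTO itself evaluates.

```python
# Simulates how a relevance expression controls which fields a respondent sees.
# Field names (q1, q2), labels, and the "yesno" list are hypothetical examples.
# Each Python lambda stands in for the SurveyCTO expression in "relevance".

SURVEY = [
    {"type": "select_one yesno", "name": "q1",
     "label": "Do you own a mobile phone?",
     "relevance": None, "check": None},
    {"type": "select_one yesno", "name": "q2",
     "label": "Do you use it to access the internet?",
     # This expression belongs in the relevance column, not choice_filter.
     "relevance": "${q1} = 'yes'",
     "check": lambda answers: answers.get("q1") == "yes"},
]


def visible_fields(answers):
    """Return the names of fields whose relevance condition is met."""
    shown = []
    for row in SURVEY:
        # A blank relevance means the field is always shown.
        if row["check"] is None or row["check"](answers):
            shown.append(row["name"])
    return shown


print(visible_fields({"q1": "yes"}))  # ['q1', 'q2']
print(visible_fields({"q1": "no"}))   # ['q1']
```

Until q1 is answered "yes", q2 stays hidden, which is exactly the behavior the relevance column is meant to produce.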

Test #2: Constraints

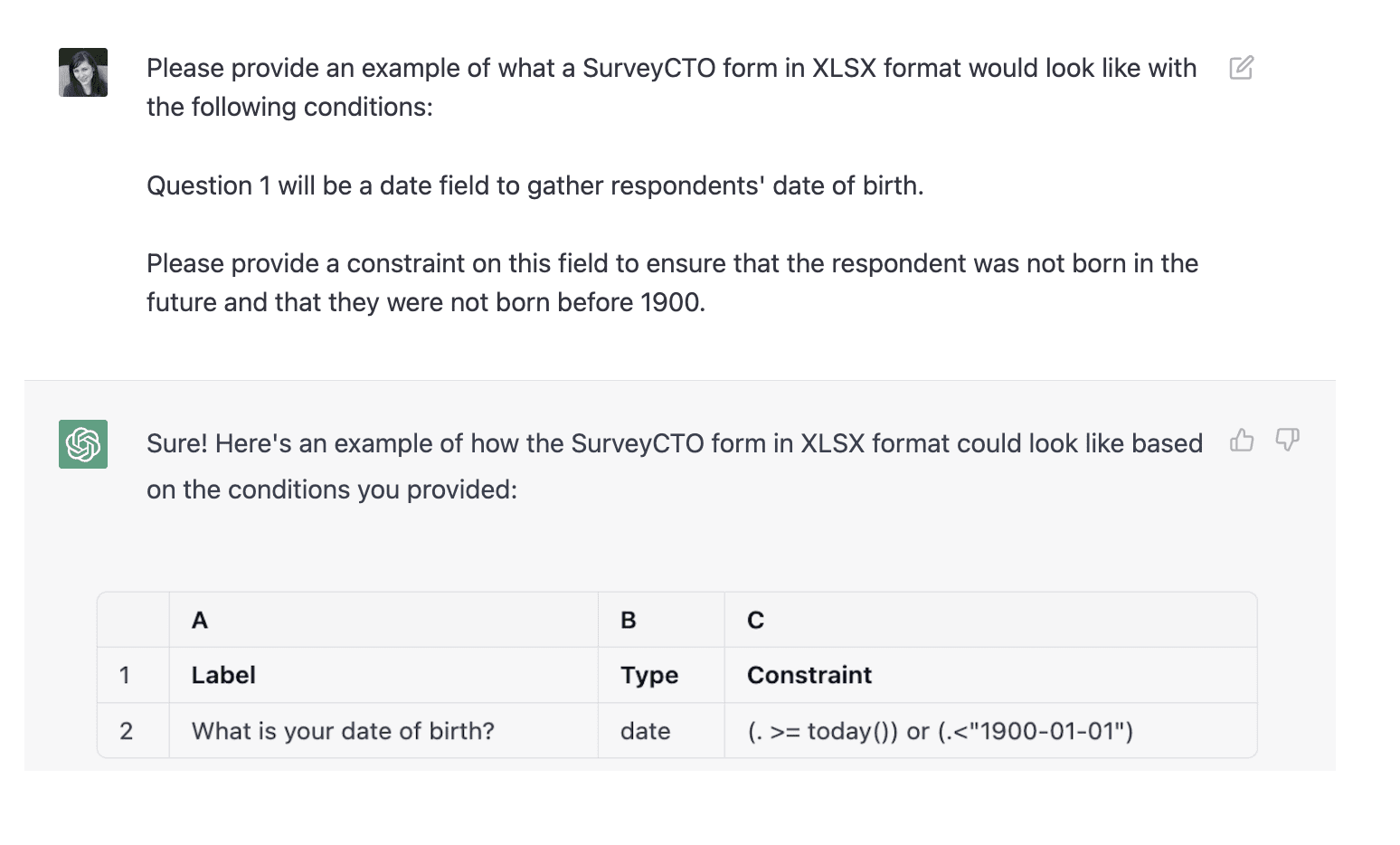

We then tested ChatGPT on another concept in form programming: constraints. In SurveyCTO, a constraint limits the answers respondents can provide in a survey question.

There are many different types of constraints, but some of the most important (and most commonly used) are constraints on integer and date fields. We chose a date field constraint for this test.

This first attempt, again, had some problems. The expression was missing some key characters, the form sample was missing the name column, and its logic, using “or,” wasn’t going to constrain respondents’ choices the way we needed.
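We don’t have ChatGPT’s exact expression in text form, but assuming its “or” version joined the same two bounds we eventually used, this short Python sketch shows why “or” cannot work: every date is either on or before today or on or after 1900-01-01, so the “or” version accepts any date. The function names and the fixed “today” are hypothetical, chosen so the results are reproducible.

```python
# Compares two ways of combining the date bounds in a constraint.
# In SurveyCTO, "." is the current field's value; here it is the argument d.
from datetime import date

EARLIEST = date(1900, 1, 1)


def constraint_with_or(d, today):
    # Mirrors (. <= today()) or (. >= date('1900-01-01')):
    # every date satisfies at least one side, so nothing is rejected.
    return d <= today or d >= EARLIEST


def constraint_with_and(d, today):
    # Mirrors (. <= today()) and (. >= date('1900-01-01')):
    # the date must fall inside the range.
    return d <= today and d >= EARLIEST


today = date(2023, 3, 1)  # fixed, hypothetical "today" for reproducible output
for candidate in (date(1850, 6, 1), date(1990, 5, 17), date(2099, 1, 1)):
    print(candidate,
          constraint_with_or(candidate, today),
          constraint_with_and(candidate, today))
```

Run as written, the “or” version accepts all three sample dates, while the “and” version rejects the 1850 and 2099 dates and accepts only the 1990 date.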

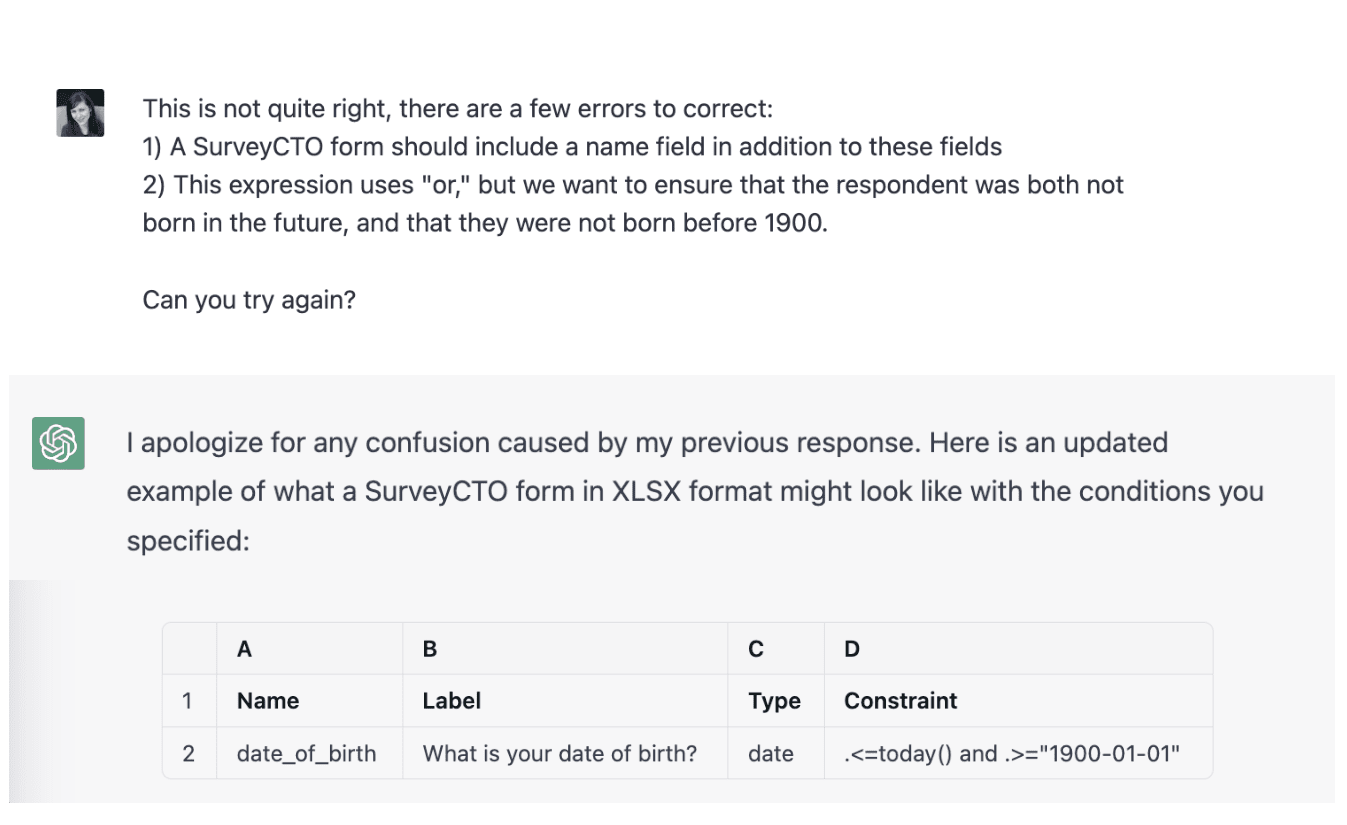

We decided to iterate further with ChatGPT:

At this point, we decided this was close enough to a working constraint expression that it would be easier to edit it ourselves than to keep discussing it with ChatGPT. We edited the expression in the image above into this final working expression, adding parentheses where needed, adding the date() function, and changing the double quotes to single quotes to match SurveyCTO form programming conventions:

(. <= today()) and (. >= date('1900-01-01'))

Can ChatGPT be used in survey design?

While ChatGPT seems to have its limitations with programming pre-designed surveys, we also considered how it might be useful in another way: actual survey design.

Creating good survey questions is an important part of collecting high-quality data. Flawless programming is critical, of course, but ultimately the success of a survey depends on the quality of the questions themselves.

First, we asked ChatGPT to create a 5-question health survey following the birth of a baby.

These were the questions it created:

- How have you been feeling physically since giving birth?

- Have you been able to get enough sleep since your baby was born?

- Have you been experiencing any postpartum mood changes or emotional difficulties?

- Have you been able to manage any physical discomforts or pains related to your delivery?

- Have you had access to appropriate medical care and support for your postpartum recovery?

While these are reasonable questions to ask following a birth, ChatGPT’s questions were quite simple. The bot did not show much consideration for how the questions would work together as a survey.

For example, in a real survey, some of these questions could benefit from a direct follow-up question, such as “Please explain the mood changes or emotional difficulties you have been experiencing,” which would provide richer, more actionable data for community health workers or researchers.

To test further, we asked ChatGPT for one more full survey — complete with response options for each question.

Create 5 survey questions asking about maize farmers’ experiences from last season’s harvest that includes at least two response options for each question.

ChatGPT came up with this survey:

1. How would you rate the overall yield from your maize farm last season?

* Excellent

* Good

* Average

* Poor

* Very Poor

2. What factors do you believe contributed to the yield from last season's harvest?

* Adequate rainfall

* Good seed selection

* Efficient use of fertilizers

* Effective pest management

* Other (please specify)

3. How satisfied are you with the market price of maize last season?

* Very satisfied

* Satisfied

* Neutral

* Dissatisfied

* Very dissatisfied

4. Were you able to sell all of your maize from last season's harvest?

* Yes

* No

5. How did you transport your maize to market last season?

* Personal vehicle

* Hired transportation

* Cooperative transportation

* Other (please specify)

How helpful these questions are will depend on your data collection goals, your survey’s structure, and your organization’s best practices.

Again, there are no follow-up questions, and no skip logic (relevance) or choice filtering is built into the structure of these questions. Each question essentially stands alone, which is often not how real surveys are designed. Having said that, these questions could be a decent start for a survey: the questions are reasonable, and the choice lists are quite good (Q2’s in particular).

To get choice lists better tailored to the needs of the real people you plan to interview, you would need further conversations with ChatGPT.
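To illustrate the kind of structure these stand-alone questions lack, here is a hedged Python sketch that turns ChatGPT’s Q2 into spreadsheet-style rows and adds a follow-up text field for “Other (please specify).” The field names, the factors list name, and the choice values are hypothetical. The column headers follow SurveyCTO’s spreadsheet form template as we understand it (a survey sheet with type, name, label, and relevance; a choices sheet with list_name, value, and label), so check them against your own template. The follow-up’s relevance uses SurveyCTO’s selected() function so the field only appears when “other” is ticked.

```python
# Sketch: turns ChatGPT's Q2 into spreadsheet rows with an "other" follow-up.
# Field names, the "factors" list name, and choice values are hypothetical.
import csv
import sys

CHOICES = [
    ("rainfall", "Adequate rainfall"),
    ("seed", "Good seed selection"),
    ("fertilizer", "Efficient use of fertilizers"),
    ("pests", "Effective pest management"),
    ("other", "Other (please specify)"),
]

# Survey sheet rows: type, name, label, relevance
survey_rows = [
    ("select_multiple factors", "yield_factors",
     "What factors do you believe contributed to the yield from "
     "last season's harvest?", ""),
    # The follow-up text field only appears when "other" is among the selections.
    ("text", "yield_factors_other", "Please specify the other factors.",
     "selected(${yield_factors}, 'other')"),
]

# Choices sheet rows: list_name, value, label
choice_rows = [("factors", value, label) for value, label in CHOICES]

writer = csv.writer(sys.stdout)
writer.writerow(["type", "name", "label", "relevance"])
writer.writerows(survey_rows)
writer.writerow([])
writer.writerow(["list_name", "value", "label"])
writer.writerows(choice_rows)
```

The same pattern (a follow-up field with a relevance expression) would also suit the postpartum mood question discussed earlier.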

The verdict

Our brief investigation shows that ChatGPT has some programming capacity and potential uses in survey design. However, we’d recommend proceeding with caution.

We’re optimistic about ChatGPT, and all the innovation that similar AI tools can bring to survey programming and design in the future. However, we want to be clear: This tool has limitations for SurveyCTO users and is not currently capable of fully programming SurveyCTO forms, or even most survey questions, on its own.

For form programming, careful iteration is needed to get close to an accurate result, and that requires existing knowledge of SurveyCTO form programming. Based on our experiment, ChatGPT could be challenging to use for very new SurveyCTO users who may have difficulty distinguishing between correct and incorrect form programming.

Throughout our experiments, ChatGPT made sure to inform us several times that it is “a text-based bot, and is not capable of programming forms.” With that in mind, we discovered it’s best to ask ChatGPT for an example of a form when using it for form programming help. Ultimately, always remember that ChatGPT needs careful human oversight for any SurveyCTO form programming, which makes sense given what it was designed for.

What about survey design? There may be more potential here. Survey design is largely a language task, which puts it closer to ChatGPT’s domain. Again, the process will still be a conversation with ChatGPT, and you’ll then need to thoughtfully edit the suggested survey questions. How much efficiency ChatGPT can add here will depend on your workflow and particular data collection needs.

In our opinion, ChatGPT seems, at least for now, best-suited as a supplementary tool for those willing to spend time conversing with it to get the best results. For example, asking ChatGPT to help generate survey questions as a way of jump-starting your own thought process and creativity could be a great use. Getting started is often the hardest part, and this can be especially true if you’ve got survey goals that are broader, and/or if you’re newer to survey data collection.

With form programming, if you’re comfortable enough with SurveyCTO to recognize when something is missing or incorrect in an expression, and you have the time and ability to carefully test ChatGPT’s suggestions, it could again prove useful as a way to get started and generate ideas. If hands-on learning through trial and error works well for you, testing ChatGPT’s suggested programming, editing it yourself, and testing again could be useful – or at least fun! It may also help when you get stuck on programming, by suggesting alternative expressions. And if you want to improve your ability to write regular expressions for use with the regex() function in SurveyCTO, ChatGPT can act as a practice partner by suggesting new expressions for you to try.
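If you do use ChatGPT this way, one low-effort habit is to check a suggested pattern against a few answers you know should pass and fail before adding it to a form. The sketch below does that with Python’s re module; the ten-digit phone pattern and the sample values are hypothetical. Python’s regex engine is close to, but not guaranteed identical to, the one SurveyCTO uses, so the pattern is anchored with ^ and $ to avoid depending on partial-versus-full matching, and a pass here is only a first check before you test the form itself.

```python
# Test a regex suggested by ChatGPT against sample answers before using it in
# a SurveyCTO constraint such as regex(., '^[0-9]{10}$').
# The pattern and the sample values are hypothetical examples.
import re

PATTERN = r"^[0-9]{10}$"  # exactly ten digits, e.g. a phone number

samples = {
    "0712345678": True,     # ten digits: should pass
    "071234567": False,     # nine digits: should fail
    "07123456789": False,   # eleven digits: should fail
    "07123 45678": False,   # contains a space: should fail
}

compiled = re.compile(PATTERN)
for value, expected in samples.items():
    matched = compiled.search(value) is not None
    status = "ok" if matched == expected else "UNEXPECTED"
    print(f"{value!r}: matched={matched} expected={expected} -> {status}")
```

Any line marked UNEXPECTED is a sign the suggested pattern needs another round of editing, or another conversation with ChatGPT.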

The do’s and don’ts for using ChatGPT with SurveyCTO

We know the above is a lot to consider! Here’s a quick summary of do’s and don’ts for using ChatGPT with SurveyCTO:

- Don’t expect correct form programming from your first prompt. You’ll likely need to go back and forth with ChatGPT before it gets the answer right.

- Do carefully test every suggested line of programming from ChatGPT.

- Do plan on carefully reviewing any survey questions designed by ChatGPT to get them to meet your data collection needs and standards.

- Don’t forget to make use of other resources, too! If you get stuck on form programming, remember: With a paid subscription, you have access to 24/7 assistance from the Helpdesk, and have a great number of support center resources at your fingertips.

What do you think?

Do you have examples of successful uses of ChatGPT or other AI tools for designing forms and collecting survey data? Be sure to reach out to us; we’d love to hear from you. You can get in touch with the author, Melissa Kuenzi, at melissa@surveycto.com.

For help programming and designing your surveys, try out SurveyCTO. Start a free 15-day trial today or request a demo.

Lindo Simelane

Digital Analytics Associate

Lindo is a part of the marketing team at Dobility, the company that powers SurveyCTO. He is responsible for all aspects of data management and analytics for SurveyCTO’s website, social media, and other marketing efforts.

Lindo has a passion for research to inform policy in international development and economics. He has extensive experience in the ICT4D space that includes research work, and a background in international business and finance.