The next chapter in SurveyCTO’s story

From the beginning, we’ve been a bootstrapped, user-funded social enterprise committed to a few powerful ideas: data quality and security should be central to social impact work, and investing in the best digital tools is critical to the success of social impact programs. For over a decade, we’ve pursued this mission by building features that help users collect their best data ever. Whether you work in monitoring & evaluation, operate programs for an NGO, run a survey firm, or conduct academic research, we’re confident that SurveyCTO can help you streamline your data collection and hone your practices over time.

To that end, we’re delighted to announce the launch of a brand-new tool on our website: the SurveyCTO AI Assistant. The Assistant is an artificial intelligence (AI) chatbot designed for and trained on SurveyCTO’s documentation, website, and Support Center, and it’s here to help you level up your data collection work like never before.

Why an AI Assistant?

You don’t need us to tell you that there’s an AI revolution happening right now. It entered the public consciousness with the launch of ChatGPT by OpenAI in 2022. Even before OpenAI took the world by storm, the team at SurveyCTO had been intrigued by the possibilities that artificial intelligence and machine learning could offer to data collection professionals. We know that data collection is multidisciplinary and requires a wide range of skills, from knowledge of the subject and population being studied to hard skills in research methodologies. For people working in the social impact space, constraints on time, expertise, and budget can unfortunately lower the quality of their research and data collection. We saw great potential for AI to fill some of the gaps caused by these challenges, and we decided to take action.

Over the past few years, Dobility has been involved both as an early investor and a collaborator in experimental AI research and development (R&D) work with Higher Bar AI, PBC (HBAI), a public benefit corporation started by our founder, Dr. Christopher Robert. We’ve explored ways that AI technologies like chatbots can help researchers incorporate rigorous best practices into their work, ultimately raising the bar for data collection quality.

"I think that AI gives us the opportunity to codify best practices in a way that helps us to implement them both more frequently and more faithfully, which can help us to improve the overall quality of our work."

– Dr. Christopher Robert

Through this R&D work, we came to understand that while chatbots could be extremely useful, the mere existence of an AI Assistant for data collection wouldn’t guarantee an increase in data quality. AI is only as smart as the data it is trained on, so a potential pitfall of AI Assistants is that the answers and advice they offer can be of merely average quality, pulling overall practices toward the average instead of improving them. And average answers weren’t going to raise the bar for SurveyCTO users.

To get a genuinely helpful chatbot for survey research, we engaged in an intensive evaluation process that went beyond the usual ad hoc testing given to most LLMs. Our team developed a detailed, automated system for evaluating the Assistant’s performance. This included defining “test sets” of conversation logs, re-running select test conversations, and then comparing the AI’s responses and rigorously evaluating them against set measures of quality. The team also focused on evaluating and re-running multi-turn conversations that reflected the way that real people actually talk to chatbots. Finally, the Assistant’s output was evaluated by both real humans and other LLMs.
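For readers who want a more concrete picture of that kind of process, here is a minimal Python sketch of a test-set evaluation loop. It is an illustration only, not the system described above: the `ConversationCase` class, the `stub_assistant` function, and the keyword-based `score` are all hypothetical stand-ins. A real setup would call an actual chatbot and would rely on human or LLM graders rather than a simple keyword check.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Callable, Dict, List, Tuple

# One completed exchange: (user message, assistant reply).
Turn = Tuple[str, str]
# Hypothetical assistant interface: (history so far, new user message) -> reply.
Assistant = Callable[[List[Turn], str], str]


@dataclass
class ConversationCase:
    """One logged conversation plus the terms a good final answer should mention."""
    name: str
    user_turns: List[str]
    expected_terms: List[str]  # crude stand-in for a real quality measure


def rerun(case: ConversationCase, assistant: Assistant) -> List[Turn]:
    """Replay every user turn in order so the assistant sees the full history."""
    history: List[Turn] = []
    for message in case.user_turns:
        reply = assistant(history, message)
        history.append((message, reply))
    return history


def score(case: ConversationCase, history: List[Turn]) -> float:
    """Fraction of expected terms that appear in the final reply (0.0 to 1.0)."""
    final_reply = history[-1][1].lower()
    hits = sum(term.lower() in final_reply for term in case.expected_terms)
    return hits / len(case.expected_terms)


def evaluate(test_set: List[ConversationCase], assistant: Assistant) -> Dict[str, float]:
    """Re-run each case and score it, keyed by case name."""
    return {case.name: score(case, rerun(case, assistant)) for case in test_set}


def stub_assistant(history: List[Turn], message: str) -> str:
    """Canned replies standing in for a real chatbot call."""
    if "only appears" in message:
        return "Use the relevance column to show a field conditionally."
    return "Could you share more detail?"


TEST_SET = [
    ConversationCase(
        name="skip-logic",
        user_turns=["How do I add a question that only appears after a 'yes' answer?"],
        expected_terms=["relevance"],
    ),
    ConversationCase(
        name="sampling-follow-up",
        user_turns=["I need help with my study.", "How should I build my sample?"],
        expected_terms=["sampling frame", "stratified"],
    ),
]

results = evaluate(TEST_SET, stub_assistant)
print(results)                 # per-case scores
print(mean(results.values()))  # overall average across the test set
```

With the stub above, the single-turn case scores 1.0 and the multi-turn case scores 0.0, which is the sort of gap a test set is meant to surface. Re-running the same test set after each change to the assistant makes it possible to see whether answers are getting better or worse.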

Ultimately, we knew that SurveyCTO users would want (and deserve!) something exceptional when it came to AI. We only wanted to roll out a SurveyCTO chatbot if it could significantly help survey researchers, NGOs, and governments raise the bar on their data collection work, address common challenges, and maximize their impact. That’s why we put such rigorous work into the SurveyCTO Assistant, and why it’s different from other AI assistants. It’s not only here to help make work a little easier or faster; it’s here to up your game.

Here are a few more ways the SurveyCTO Assistant stands out from the chatbot crowd:

- Rather than relying on a pre-built chatbot platform like OpenAI’s GPT or Microsoft’s Copilot Studio, the SurveyCTO Assistant is custom-built with the LangChain library, drawing on experience hosting on AWS and using Microsoft’s Azure AI Search solution.

- It is trained on SurveyCTO’s entire body of content, from product documentation to our extensive, expert support center library to our blog and website.

- It can not only respond to prompts, but also review survey forms and offer suggestions.

- The Assistant cites its sources and points you to further resources for more learning (not all chatbots do this).

- SurveyCTO Assistant answers reflect this rigorous process in their clarity, quality, and comprehensiveness (as you’ll see below!).

Learn more about the creation of the SurveyCTO Assistant here: Beating the Mean: Beyond POCS. If you’re interested in pursuing similar projects, there is now an open-source, AI-powered survey instrument evaluation toolkit from HBAI available on GitHub.

How to use the SurveyCTO Assistant

You can easily open and interact with the Assistant here on our website. Please note that the Assistant does not appear within the SurveyCTO platform itself, and has no access to users’ accounts or data.

To interact with the Assistant, you’ll want to ask it questions, or give it prompts. You can ask questions about how to use SurveyCTO, as well as questions about data collection and survey research best practices.

Want to get started? Here are some suggested prompts. This list is not exhaustive, but it should give you some ideas of how to achieve different objectives with the Assistant, such as:

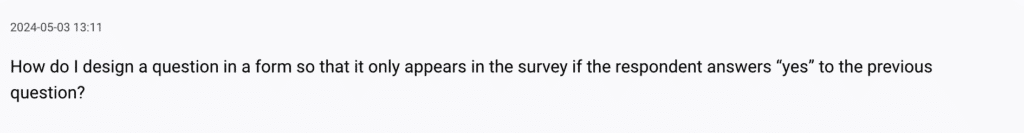

- Programming forms.

  Sample prompt: How do I design a question in a form so that it only appears in the survey if the respondent answers “yes” to the previous question?

- Troubleshooting.

  Sample prompt: What is wrong with the following form programming expression?

  (selected(${group1_test), ‘other’)

  Put in any expression that isn’t working the way you want or expect, and the Assistant can help you find the issue.

- Improving research and project designs.

  Sample prompt: I am measuring the effects of having received at least 3 years of childhood education on women’s income in a rural community in India, to see if even limited education can help improve employment opportunities and increase financial stability. Can you provide 2 or 3 steps I should take to build my sample?

- Honing survey questions.

  Sample prompt: I plan to conduct cognitive interviews to identify potential phrasing issues in my survey questions. Please predict the phrasing issues I will identify in the cognitive interviews.

  Survey context: Surveying mothers in rural South Carolina after they have given birth.

  Survey question: Have you regularly monitored your blood pressure at home or outside of a healthcare visit during any of the following time periods?

  1. Before my most recent pregnancy?

  2. During my most recent pregnancy?

  3. Since my new baby was born?

- Getting a form review.

  Want to get your survey form evaluated by the Assistant? Need to know the best way to incorporate a new question, or running into errors? Use the paperclip icon to upload an XLSX version of a form and ask the Assistant about it.

  Sample prompt: I’m having a problem with the relevance expression for the group “identifying_information” in the form I’ve uploaded. I only want it to be relevant if the respondent’s household size is greater than 2. Please review the attached form and let me know how I can program my form to achieve this.

Real-life examples from using the Assistant

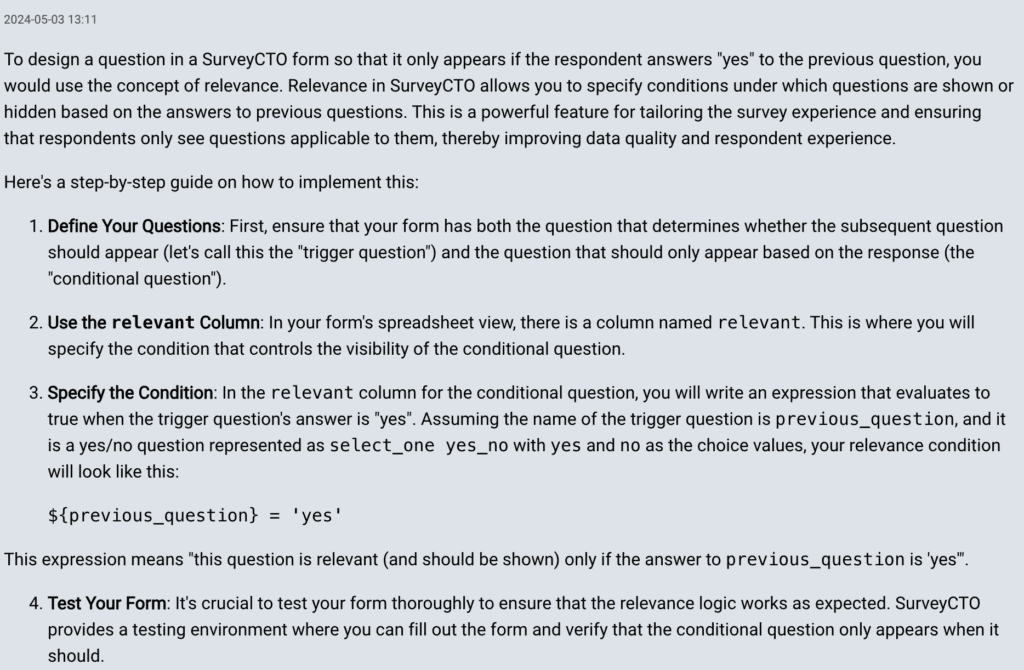

To get an idea of what SurveyCTO Assistant responses look like, here’s what happened when we gave it example prompt #1 from above:

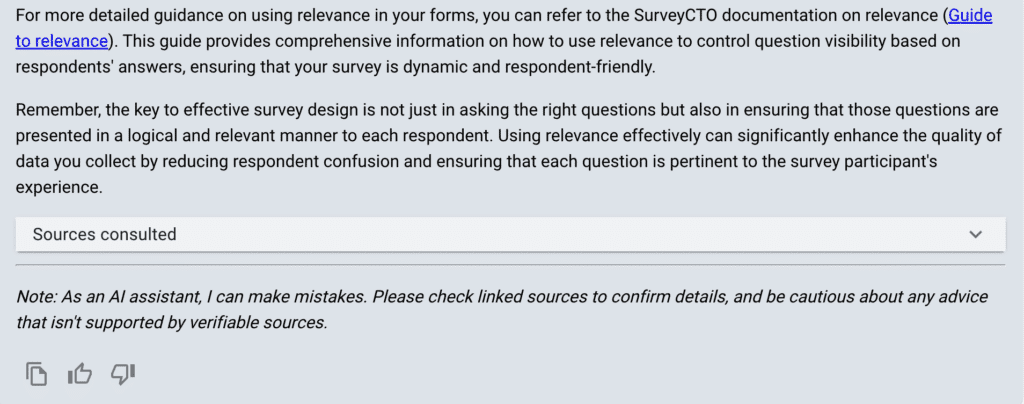

Here, the Assistant gives an overview of the concept of relevance, then provides detailed steps for you to implement relevance. Finally, it directs you to further resources on the topic so you can learn more!

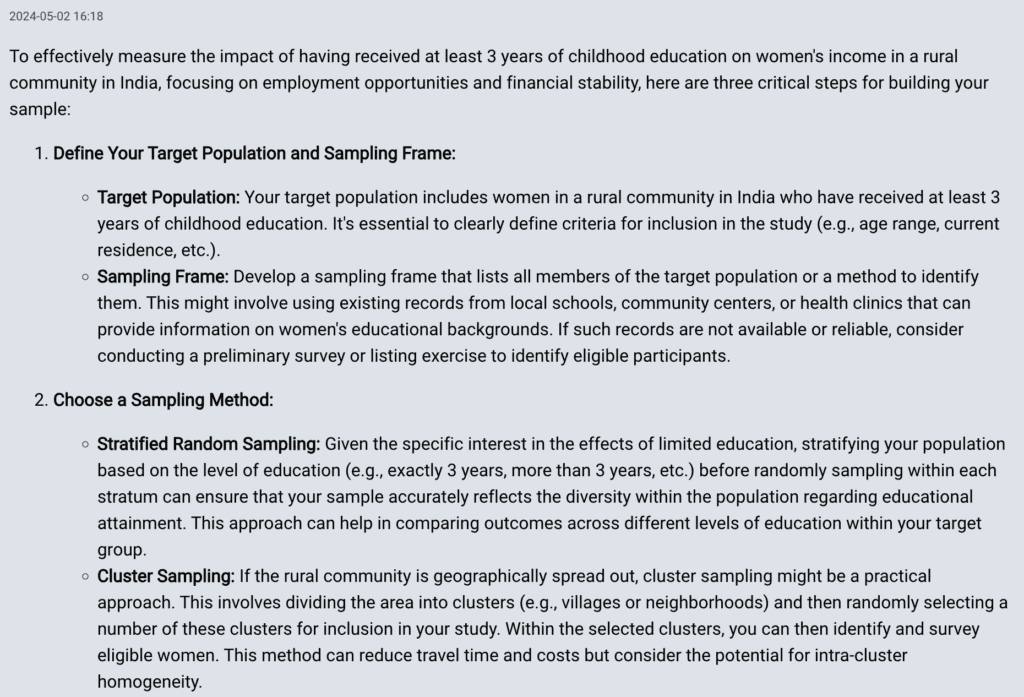

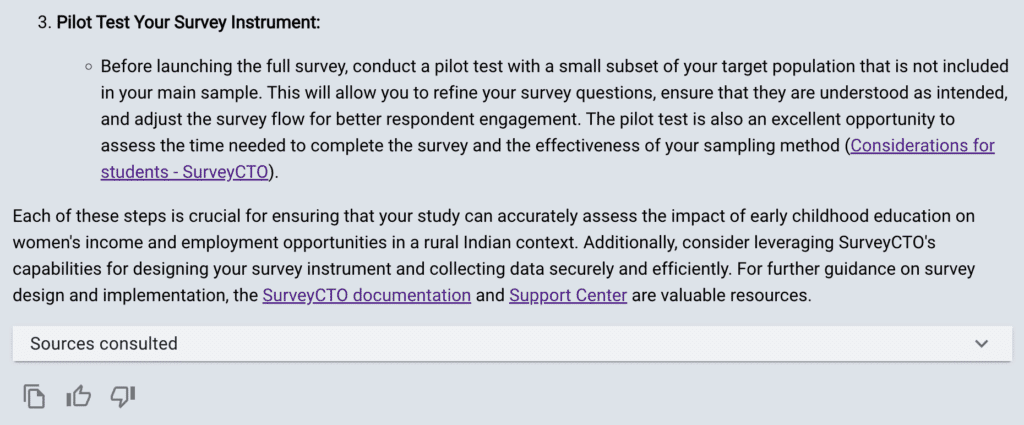

Are you a more experienced survey designer looking for more nuanced data collection advice? Check out the Assistant’s response to prompt #3:

Here, the AI Assistant identified steps to define a target population and sampling frame and to choose a sampling method, and it recommended pilot testing as a way to further improve your data collection instrument. This more advanced prompt also drew a response that went beyond a basic answer by sharing additional best practices to consider. For example, the suggestions under “stratified random sampling” added nuance to the prompter’s original sample, illuminating the critical point that the original sample would include both women with “only three years of schooling” and those with “three or more years of schooling.” Those categories are actually pretty different, and reading through the Assistant’s response made it clear that this sample could be even better defined for best results.

Come meet the SurveyCTO AI Assistant

By simply providing the Assistant with a solid prompt, you and your organization can be well on your way to even better data quality in no time! Ready to get started? Open up the Assistant and start typing in your questions about SurveyCTO features and research techniques, or upload an XLSX form for review.

A few things to keep in mind as you start exploring:

- Keeping it real: The Assistant isn’t perfect. It is still learning! You should double-check linked sources, and be sure to thoroughly test all solutions that the Assistant provides. If you see something that seems incorrect or unhelpful from the Assistant, please let us know.

- The Assistant is here to answer all kinds of questions, but not to solve issues with your SurveyCTO account, or deal with time-sensitive problems you might encounter during fieldwork. Please also be aware that we are not monitoring your questions to the Assistant. If you run into a SurveyCTO Collect error during data collection, or you encounter any type of problem with your SurveyCTO workflow, contact us through the Support Center so that our Customer Support experts can help you.

- The Assistant is new, and we’re constantly working to improve it! Have questions or ideas about it? Get in touch at info@surveycto.com.